HAMERKOP’s Test Flight With AI

Saying Hello to Artificial Intelligence at HAMERKOP and in the Climate Finance Industry

Author: Jit Ping, Spring 2024

The AI (Artificial Intelligence) wave has taken the world by storm, and no person or industry has been spared its effects. The climate finance industry is well-positioned to harness AI to deliver greater value for all stakeholders involved. Indeed, we may not be far from a world where AI can evaluate the feasibility of carbon projects and recommend new projects in locations we had not previously considered.

By way of introduction, I am Jit Ping. Earlier this year, I spent two months at HAMERKOP as an AI research intern, exploring how HAMERKOP and the broader industry can use AI today and in the future. In this blog post, I share a few insights from my time with HAMERKOP.

What is AI?

AI broadly refers to systems that perform tasks that would normally require human intelligence [1]. Microsoft CEO Satya Nadella puts it succinctly: AI is not just about delivering information to users but “about intelligence at your fingertips or expertise at your fingertips” [2]. AI has shown strong potential for detecting various cancers earlier and more accurately [3]. Its life-altering capabilities also extend beyond the individual to the planet as a whole: AI is being used to measure how quickly icebergs are melting and to decide where to focus ocean clean-up efforts [4].

LLMs (Large Language Models) such as ChatGPT, Gemini and Claude brought AI into the public consciousness. They generated strong interest because they are easy to use and could potentially replace many white-collar office jobs we previously thought irreplaceable. GPT-4’s strong performance on the bar exam taken by aspiring lawyers illustrates how well LLMs can read and write [5]. Crucially, LLMs can analyse and generate text on a far larger scale than humans. For example, Anthropic’s Claude can process an entire novel such as The Great Gatsby in a single query [6].

LLMs represent only a small sliver of what AI can do. AI’s greatest promise lies in the broader field of machine learning [7], in which computer systems learn from data to find relationships and make predictions [8]. The excitement around machine learning stems from its ability to train on massive datasets and to unearth patterns that humans could never discover on their own. For example, machine learning algorithms can analyse data from sensors attached to wind turbines to help schedule preventive maintenance before a breakdown occurs [9].
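To make “find relationships in data and make predictions” concrete, here is a deliberately tiny sketch. It fits a straight-line trend to hypothetical daily vibration readings from a turbine sensor using ordinary least squares, then extrapolates to estimate how many days remain before the readings cross an assumed alarm level. The readings, the threshold and the function names are invented for illustration; real predictive-maintenance systems use far richer models and data.

```python
from typing import Optional, Sequence


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> tuple[float, float]:
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def days_until_threshold(
    days: Sequence[float], readings: Sequence[float], threshold: float
) -> Optional[float]:
    """Extrapolate the fitted trend to the threshold.

    Returns None if readings are not rising, and 0.0 if the trend line
    has already crossed the threshold by the last observed day.
    """
    slope, intercept = fit_line(days, readings)
    if slope <= 0:
        return None
    crossing_day = (threshold - intercept) / slope
    return max(0.0, crossing_day - days[-1])


# Hypothetical daily vibration readings (mm/s) from one turbine bearing.
days = [0, 1, 2, 3, 4, 5]
vibration = [2.0, 2.1, 2.3, 2.4, 2.6, 2.7]
ALARM_LEVEL = 4.0  # assumed maintenance threshold, not an industry standard

print(days_until_threshold(days, vibration, ALARM_LEVEL))
```

A straight line is the simplest possible “model”; the point is only that the prediction comes from a relationship learned from past data rather than from a fixed maintenance calendar.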

AI Use Case 1 – Reading & Writing Reports

LLMs are remarkably capable writers. Japanese author Rie Kudan won her country’s prestigious Akutagawa Prize for her novel while revealing that “probably about 5% of the whole text is written directly from the generative A.I.” [10]. Experts believe that generative AI (which includes LLMs) has significantly improved technology’s ability to approach human-level performance in creativity and social-emotional skills [11].

LLMs may excel at writing and generating ideas, but are they as good in the business world? After all, HAMERKOP, like most firms, depends on precision and factual accuracy. HAMERKOP’s trial with LLMs suggests that while they are right most of the time, they still produce clearly wrong output often enough to matter. In our trial, the models made up numbers that did not appear in the reports and struggled to grasp the full context of lengthy documents.

Indeed, LLMs’ failures to get basic facts right or follow basic instructions are well documented. LLMs are “probabilistic and sometimes unpredictable” by design [12]: at their core, they are very good at predicting which words are likely to come next. This means that their ability to answer 2+2=4 does not rest on a fundamental understanding of mathematics and is not guaranteed. AI researchers call some of these failures “hallucinations”, for example when a model contradicts itself or makes up facts that do not exist [13].
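The toy below illustrates what “probabilistic” means in practice. In a typical sampling setup, a language model assigns a score to each candidate next token, converts the scores into probabilities with a softmax, and then draws one token at random in proportion to those probabilities. The scores here are invented for illustration and do not come from any real model: most draws return "4", but a noticeable fraction do not.

```python
import math
import random


def softmax(logits: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Turn raw scores into probabilities; a lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {tok: score / temperature for tok, score in logits.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - top) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample_next_token(probs: dict[str, float], rng: random.Random) -> str:
    """Draw one token at random, weighted by its probability."""
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Invented scores a model might assign to continuations of "2+2=".
logits = {"4": 5.0, "5": 1.5, "22": 1.0, "four": 2.0}
probs = softmax(logits)

rng = random.Random(0)  # fixed seed so the run is repeatable
draws = [sample_next_token(probs, rng) for _ in range(1000)]
print({tok: draws.count(tok) for tok in logits})
```

Lowering the temperature concentrates the probability on "4" and makes wrong answers rarer, but it does not give the model any understanding of arithmetic; it only changes how the same learned scores are sampled.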

LLMs therefore cannot yet be given work to perform autonomously, without human guidance and review. There is nonetheless a role for them at HAMERKOP and other companies. LLMs can comb through lengthy documents to find a specific data point (or point to the page where the information resides) and synthesise chunks of text into a neat table.
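One way to keep a human-checkable trail is to ask the model to return each value together with the page it came from, then mechanically confirm that the number really appears on that page before anyone relies on it. The sketch below assumes the report has already been split into per-page text and that the LLM’s answer has been parsed into a hypothetical `Extraction` record; the LLM call itself is omitted, and the report text is invented.

```python
import re
from dataclasses import dataclass


@dataclass
class Extraction:
    """A value an LLM claims to have found, plus the page it cited (hypothetical schema)."""
    field: str
    value: str
    page: int  # 1-based page number


def normalise_number(text: str) -> str:
    """Strip thousands separators and whitespace so '12,500' matches '12500'."""
    return re.sub(r"[,\s]", "", text)


def is_supported(extraction: Extraction, pages: list[str]) -> bool:
    """True only if the cited page exists and contains the claimed number."""
    if not 1 <= extraction.page <= len(pages):
        return False
    numbers_on_page = {
        normalise_number(m)
        for m in re.findall(r"\d[\d,]*(?:\.\d+)?", pages[extraction.page - 1])
    }
    return normalise_number(extraction.value) in numbers_on_page


# Hypothetical two-page verification report, already converted to text.
report_pages = [
    "Project overview. Monitoring period: 2021-2022.",
    "Verified emission reductions for the period: 12,500 tCO2e.",
]
genuine = Extraction("verified_reductions", "12500", page=2)
made_up = Extraction("verified_reductions", "15000", page=2)
print(is_supported(genuine, report_pages), is_supported(made_up, report_pages))
```

A check like this catches invented numbers, but not a genuine number attached to the wrong field, so a human reviewer is still needed.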

Even if LLMs can extract information well, their true promise lies in doing so at scale. At present, most information on carbon projects resides in monitoring and verification reports. However, this information is not reported in a standardised format: sometimes a figure is expressed only as a mathematical symbol, and sometimes it is simply not present at all. LLMs also struggle to tally values correctly when a verification report revises or adds to figures from earlier reports [14]. Building a system that can process documents en masse while balancing “cost, quality, and generality” remains an active area of academic research [15]. Hopefully, such technology will enter the mainstream in the not-too-distant future.
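The tallying problem is easy to see in code. If a later verification report restates a figure for a monitoring period already covered by an earlier report, simply adding up every number found double counts that period. The sketch below uses an invented `ReportEntry` schema and invented figures, and keeps only the most recent figure per monitoring period before summing: the naive sum here would be 30,600 tCO2e, against a consolidated 20,600 tCO2e.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReportEntry:
    """One figure reported for one monitoring period (hypothetical schema)."""
    report_date: str     # ISO date, e.g. "2023-03-01"
    period: str          # monitoring period label, e.g. "2021"
    reductions_t: float  # emission reductions in tCO2e


def consolidate(entries: list[ReportEntry]) -> dict[str, float]:
    """Keep only the most recent figure for each monitoring period."""
    latest: dict[str, ReportEntry] = {}
    # ISO dates sort correctly as strings, so this processes oldest reports first.
    for entry in sorted(entries, key=lambda e: e.report_date):
        latest[entry.period] = entry  # a newer report overwrites an older one
    return {period: e.reductions_t for period, e in sorted(latest.items())}


entries = [
    ReportEntry("2022-03-01", "2021", 10_000.0),
    ReportEntry("2023-03-01", "2021", 9_400.0),   # later report revises 2021 down
    ReportEntry("2023-03-01", "2022", 11_200.0),  # and adds a new period
]
by_period = consolidate(entries)
print(by_period, sum(by_period.values()))
```

The consolidation rule itself is trivial; the hard part, as noted above, is getting an LLM to extract the period, date and value reliably from unstandardised reports in the first place.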

AI Use Case 2 – Making Quality Predictions

AI could help overcome two of the biggest stumbling blocks to implementing a climate project successfully: labour cost and uncertainty. The most labour-intensive work in a climate project is often fieldwork. Reforestation projects, for example, require lengthy land analysis to estimate potential emission reductions and to monitor the project after implementation. AI can analyse satellite imagery to annotate the characteristics of an ecosystem and track changes in its make-up over time [16].
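Satellite-monitoring pipelines often start from simple spectral indices before any machine learning is applied. The sketch below is not an AI model itself: it computes the widely used Normalised Difference Vegetation Index, NDVI = (NIR − Red) / (NIR + Red), for the same area on two dates and flags pixels whose NDVI dropped by more than an assumed threshold. This is the kind of signal a trained model would learn to interpret at scale. The reflectance values and the 0.2 threshold are invented for illustration.

```python
Grid = list[list[float]]


def ndvi(nir: Grid, red: Grid) -> Grid:
    """Compute NDVI = (NIR - Red) / (NIR + Red) pixel by pixel."""
    out: Grid = []
    for nir_row, red_row in zip(nir, red):
        row = []
        for n, r in zip(nir_row, red_row):
            total = n + r
            row.append((n - r) / total if total else 0.0)  # avoid dividing by zero
        out.append(row)
    return out


def vegetation_loss(before: Grid, after: Grid, drop: float = 0.2) -> list[tuple[int, int]]:
    """Return (row, col) of pixels whose NDVI fell by more than `drop`."""
    return [
        (i, j)
        for i, (b_row, a_row) in enumerate(zip(before, after))
        for j, (b, a) in enumerate(zip(b_row, a_row))
        if b - a > drop
    ]


# Hypothetical 2x2 reflectance values for the same area on two dates.
ndvi_2020 = ndvi(nir=[[0.50, 0.48], [0.45, 0.30]], red=[[0.08, 0.10], [0.09, 0.20]])
ndvi_2023 = ndvi(nir=[[0.49, 0.25], [0.46, 0.29]], red=[[0.08, 0.20], [0.09, 0.21]])
print(vegetation_loss(ndvi_2020, ndvi_2023))
```

In this toy example only the top-right pixel shows a sharp drop in vegetation; a fixed threshold like this is crude, which is exactly where learned models can add value.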

Projects need investment to operate, and investors need a good idea of the returns they can expect before committing any funding. AI can analyse vast swaths of historical data to make predictions about the future. For instance, AI models can estimate the volume of credits a project is likely to generate and the price each credit might sell for [17].
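Predictions like these are most useful to investors when they come with a range rather than a single number. The sketch below does not show the AI model itself. Instead, it assumes a model has already produced estimates, with uncertainty, for annual credit volume and price, and it uses a simple Monte Carlo simulation to turn them into low, median and high revenue scenarios. The independent normal distributions and all figures are assumptions chosen for brevity.

```python
import random
import statistics


def simulate_revenue(
    mean_credits: float,
    credits_sd: float,
    mean_price: float,
    price_sd: float,
    runs: int = 10_000,
    seed: int = 42,
) -> list[float]:
    """Sample credit volume and price independently; return revenue for each run."""
    rng = random.Random(seed)  # fixed seed so results are repeatable
    revenues = []
    for _ in range(runs):
        credits = max(0.0, rng.gauss(mean_credits, credits_sd))  # no negative issuance
        price = max(0.0, rng.gauss(mean_price, price_sd))        # no negative prices
        revenues.append(credits * price)
    return revenues


# Hypothetical project: about 50,000 credits a year at about USD 8 each, both uncertain.
revenues = simulate_revenue(50_000, 10_000, 8.0, 2.5)
deciles = statistics.quantiles(revenues, n=10)  # 9 cut points: 10%, 20%, ..., 90%
p10, p50, p90 = deciles[0], deciles[4], deciles[8]
print(f"P10 {p10:,.0f}  P50 {p50:,.0f}  P90 {p90:,.0f}")
```

In a real analysis the distributions would come from the model’s own uncertainty estimates, and volume and price may well be correlated rather than independent.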

A growing number of companies are entering the industry with the aim of using AI to help companies implement their projects from start to finish. For instance, some use an LLM to answer pertinent questions about the carbon market and about getting a project started, and offer software that allows project owners to receive funding, document project details and connect with other stakeholders [18]. Others are building a suite of AI software to help with geospatial analysis, choosing the right methodology and writing project description documents [19].

The performance of AI models in tasks like land surveys and predictions ultimately depends on the quality and quantity of their training data and on the training methods used. However, developers are often loath to disclose detailed information about their datasets or training approaches. Compounding the problem, AI models, particularly neural networks, often remain opaque “black boxes”: their internal reasoning, and the process by which they arrive at specific outputs, is not fully transparent or understood [20]. To trust a model’s outputs, researchers need detailed explanations of how the model reaches its decisions and the ability to reproduce and validate its results independently.
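Full transparency is partly a policy question, but part of reproducibility is simple record-keeping. The sketch below, using only the Python standard library, records a SHA-256 fingerprint of the exact training data alongside the configuration and random seed, so that a third party given the same data could at least confirm they are reproducing the same run. The manifest fields, the example rows and the configuration are hypothetical, and a manifest does not by itself explain why a model makes a given prediction.

```python
import hashlib
import json


def dataset_fingerprint(rows: list[dict]) -> str:
    """SHA-256 of the dataset in canonical JSON form (keys sorted, fixed separators)."""
    canonical = json.dumps(rows, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def run_manifest(rows: list[dict], config: dict, seed: int) -> dict:
    """Collect what an independent reviewer would need to rerun the training."""
    return {
        "dataset_sha256": dataset_fingerprint(rows),
        "n_rows": len(rows),
        "config": config,
        "seed": seed,
    }


# Hypothetical training rows and settings for a land-cover classifier.
rows = [
    {"pixel_id": 1, "ndvi": 0.71, "label": "forest"},
    {"pixel_id": 2, "ndvi": 0.12, "label": "bare"},
]
config = {"model": "random_forest", "n_trees": 200, "train_split": 0.8}
manifest = run_manifest(rows, config, seed=7)
print(json.dumps(manifest, indent=2))
```

Sorting keys makes the fingerprint independent of how each record’s fields happen to be ordered, while any change to a value, or to the order of the rows, produces a different fingerprint.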

Conclusion – Using AI vs Being Used by AI

The “Jagged Technological Frontier” of AI

For HAMERKOP and other businesses, the central question is therefore not whether to use AI, but how to use it well.

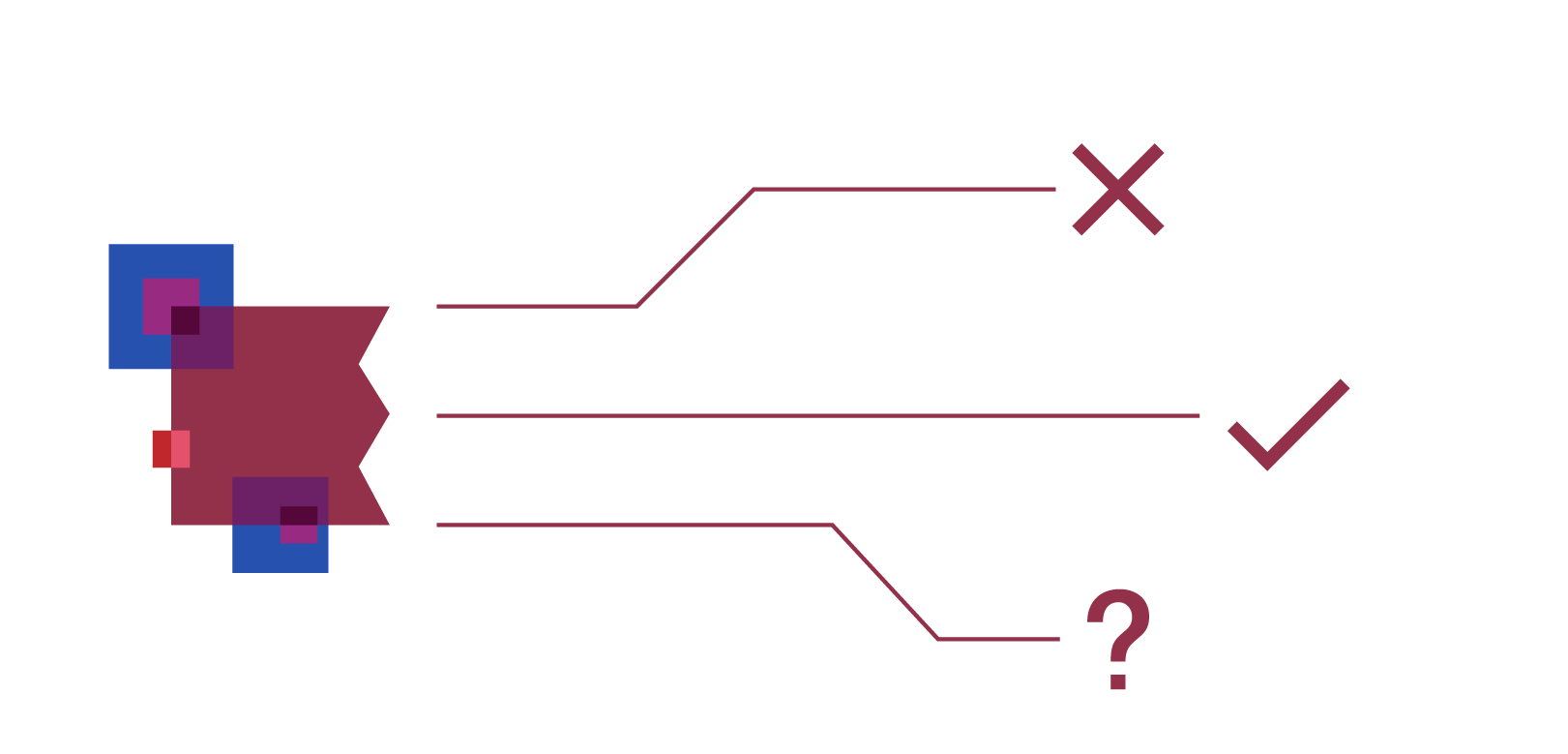

Training employees to be AI-ready is, in my view, the most important factor. First, users need to be aware of AI’s limitations. This article alone has shown that AI can invent facts and should not be relied upon blindly. Moreover, researchers at Harvard Business School describe a “jagged technological frontier”, whereby AI handles some tasks well while failing at others of seemingly similar difficulty [21]. Knowing whether a task lies inside or beyond this frontier helps users save time rather than waste it.

Second, simple prompt engineering techniques can greatly improve the results LLMs produce. For instance, Anthropic’s Claude models performed over 30% better on multiple-choice tests when the reference text was placed at the beginning of the prompt rather than at the end [22]. Training users in prompt engineering helps ensure that AI delivers more accurate information in the format users expect.
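The ordering advice translates into a small, reusable template. The sketch below puts the reference text first, wrapped in tags so the model can tell it apart from the instructions, and ends with the question plus an instruction to admit when the answer is missing. The function name, tag names and wording are illustrative choices rather than a required format, no particular model API is assumed, and the report text is invented; the resulting string would be sent through whichever LLM interface you use.

```python
def build_prompt(document: str, question: str) -> str:
    """Place the long reference text first and the question last."""
    return (
        "Here is a document:\n"
        "<document>\n"
        f"{document.strip()}\n"
        "</document>\n\n"
        "Using only the document above, answer the question below. "
        "If the answer is not in the document, say so rather than guessing.\n\n"
        f"Question: {question.strip()}"
    )


# Hypothetical excerpt from a verification report.
report_text = "Verified emission reductions for 2021: 12,500 tCO2e."
prompt = build_prompt(report_text, "How many tCO2e were verified for 2021?")
print(prompt)
```

Keeping the template in one function also means every colleague sends the model the same, tested structure instead of improvising a new prompt each time.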

The climate finance industry has only just embarked on its AI journey. To benefit from AI’s vast potential, firms need to embrace it across the whole organisation by equipping every employee with the skills to use the latest AI tools effectively.

[Disclaimer: Claude 3 helped with the proofreading of this article.]

References:

[1] https://www.britannica.com/technology/artificial-intelligence

[2] https://www.weforum.org/podcasts/meet-the-leader/episodes/davos-2024-conversation-microsoft-satya-nadella/

[3] https://health.google/intl/ALL_uk/health-research/imaging-and-diagnostics/

[4] https://www.weforum.org/agenda/2024/02/ai-combat-climate-change/

[5] https://www.reuters.com/technology/bar-exam-score-shows-ai-can-keep-up-with-human-lawyers-researchers-say-2023-03-15/

[6] https://twitter.com/AnthropicAI/status/1656700156518060033?lang=en

[7] https://hammerspace.com/is-2024-the-year-of-the-enterprise-llm/

[8] https://britannicaeducation.com/blog/ai-in-education/

[9] https://plat.ai/blog/predictive-maintenance-machine-learning/

[10] https://www.smithsonianmag.com/smart-news/this-award-winning-japanese-novel-was-written-partly-by-chatgpt-180983641/

[11] https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/the-economic-potential-of-generative-ai-the-next-productivity-frontier#work-and-productivity

[12] https://medium.com/@glovguy/large-language-models-reasoning-capabilities-and-limitations-951cee0ac642

[13] https://www.vellum.ai/blog/llm-hallucination-types-with-examples

[14] https://bezerocarbon.com/insights/generative-ai-techniques-can-drive-standardisation-and-increased-transparency-to-the-vcm

[15] https://arxiv.org/pdf/2304.09433.pdf

[16] https://medium.com/@Gaurav_writes/machine-learning-for-forest-monitoring-algorithms-use-cases-challenges-4b9f3fb2e766

[17] https://drive.google.com/file/d/1wq5612Ag1FlMwiFsYnAjEXOcN0HZXZVG/view

[18] https://www.ivyprotocol.com/

[19] https://www.nika.eco/carbongpt

[20] https://towardsdatascience.com/why-we-will-never-open-deep-learnings-black-box-4c27cd335118

[21] https://www.hbs.edu/ris/Publication%20Files/24-013_d9b45b68-9e74-42d6-a1c6-c72fb70c7282.pdf

[22] https://www.youtube.com/watch?v=6d60zVdcCV4&t=1741s